
VMware has recently made its virtual machine software Fusion free for personal use. The current version also supports OpenGL 4.2, which is more than what Expresii requires, so we gave running Expresii on it a shot. The good news is that Expresii runs without error. However, one hurdle is that graphics tablets are not plug-and-play. We finally got tablet input working using another piece of software called USB Network Gate:

And here is everything working together:

Performance is only 30-40 FPS on an M1 MacBook Air, not as good as with Parallels Desktop. Anyway, this is still one option for using Expresii on a modern Mac with an ARM chip. We hope VMware continues to improve the performance and the USB device pass-through.


We took part in the Hong Kong Illustration and Creative Show 2024 Spring, giving talks and demonstrations at the CGLive booth. During his talk, our Dr. Nelson Chu showed a little sneak peek of what he's working on for simulating Western watercolor. We were lucky to have Sensei Shuen give a demonstration at the booth. You can learn a lot from her about ink painting and the use of Expresii:

This piece "劔舞乾坤" (sword dance) is spirited. The subject's pose, the composition, the linework and the contrast all contribute to its energetic look. Find out how it's done in the above video! And here's the calligraphy our Dr. Nelson Chu did as an inscription for the painting:

The all-new WACOM Movink was available for pre-order at the show, one of the few places where you could try the new hardware first-hand. The price there was pretty good at HKD5889 (compared to the official price in another region next to Hong Kong)! It's really thin and light.

Once again, we were honored to have Sensei Shuen give an ink painting demo at the Hong Kong Illustration and Creative Show last weekend. This time we used the new Wacom Cintiq Pro 22:

Watch the process:

Your brush is really a brush, not some 2D stamps! We also offered a free ink painting class. Within the one-hour class, Sensei Shuen taught us how to paint simple subjects like pumpkins, tangerines and wisteria. We were amazed by the students' interesting designs! Gradually, we're making digital ink painting more popular. We thank CGLive for organizing the class!

In 2014, we tried our hand at a USD150 8" Windows tablet. Our watercolor flows on such a device, but the Atom-based CPU is too weak to run it very smoothly. In recent years, more and more handheld gaming devices have emerged, quite a few of them running Windows. Among them is the OneXPlayer 2, which supports stylus and touch input and has detachable game controllers, so it can be used as an 8" tablet PC!

A few years back, many of the handheld PCs from GPD or OneNetbook used Intel CPUs. They are not bad for everyday tasks, but when it comes to integrated GPU performance, those Intel chips were not as good as those from AMD. Over the last couple of years, thanks to the booming mobile gaming industry, a wave of AMD-based gaming handhelds has come out, the most recent one being the Lenovo Legion Go.

Today we got hold of a OneXPlayer 2 with an AMD 6800U and 32GB RAM to test Expresii on. This is last year's model; its refresh, the OneXPlayer 2 Pro, uses the AMD 7840U. In our tests, the 6800U OneXPlayer 2 can run Expresii smoothly even with power capped at 15W.

The device is not primarily designed for painting, or for pen use in general. If OneNetbook, the company behind OneXPlayer, wishes to cater more for pen usage, we would suggest design improvements for the following issues:

1. When the machine is used like a laptop with the official keyboard attached, pen strokes near the upper corners of the screen make the device wobble.
2. The device cannot lie flat on a table like a tablet, since the air intake grille is on the back.

To fix 1, the kickstand could be made wider, like those of the Surface Pro or the latest Lenovo Legion Go. To fix 2, rubber feet could be added, just like on a normal laptop. For current users, a makeshift fix is to stick mobile phone kickstands to the back of the device. Use ones that add 4 mm or more, so that they double as feet when laying the device flat.
The OneXPlayer 2 is a bit heavy at 719g and clunky to hold single-handed. The heat it produces, along with the fan noise, can also be annoying. Our unit uses the 6800U APU, produced on a 6nm process. The latest models with the 7840U or 7640U APU are made on 4nm, which reduces heat and prolongs battery life. Once again, the gaming industry has brought about a hardware trend that also benefits us as artists. By the way, it's interesting that OneNetbook recently announced the OneMix 5, which looks like a mini Surface Laptop Studio with a "Z-fold" design, and the first video about this device on their official Bilibili channel is about painting. Looks like they are also interested in the digital artist market.

We invited Sensei Shuen to demo ink painting at the Hong Kong Illustration and Creative Show on 26 June 2023. Listen to Sensei Shuen (in Cantonese, with English subtitles) as she paints and gives tips on using Expresii and WACOM hardware:

Thanks for visiting! Hopefully more people will become aware of our invention and our efforts in digital painting development.

The specs of the Windows machine used for the demo:

Basically, you need a good GPU (graphics card) to run Expresii smoothly. For example, an NVIDIA 1060 with 6GB of VRAM is good for running Expresii smoothly on a 4K monitor. If you're only using a full-HD monitor, you can get by with a modern integrated GPU like Intel Iris Xe. For example, the Microsoft Surface Pro 7 (i7) or 8 (i5) can already run Expresii smoothly on its own 2.5K display.
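A rough way to see why the GPU recommendation depends on the monitor: a 4K screen has exactly four times as many pixels as a full-HD one, so any work done per displayed pixel roughly quadruples. The Python sketch below only does that arithmetic. It assumes cost scales with pixel count and uses an 8-bit RGBA buffer purely as an example format; this is our assumption, not how Expresii stores its data, and its real load also depends on things like canvas and simulation size, which are not modelled here.

```python
def pixel_count(width, height):
    """Number of pixels the GPU must shade for one full-screen frame."""
    return width * height

def rgba8_mib(width, height):
    """Size of one uncompressed 8-bit RGBA buffer (4 bytes per pixel) in MiB."""
    return width * height * 4 / 2**20

full_hd = pixel_count(1920, 1080)
uhd_4k = pixel_count(3840, 2160)

print(full_hd, uhd_4k, uhd_4k / full_hd)  # 2073600 8294400 4.0
print(f"{rgba8_mib(1920, 1080):.1f} MiB vs {rgba8_mib(3840, 2160):.1f} MiB per RGBA8 buffer")
# 7.9 MiB vs 31.6 MiB per RGBA8 buffer
```

Every full-screen pass (display, paper shading, compositing) pays this 4x factor, which is why a discrete GPU helps most on 4K screens.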

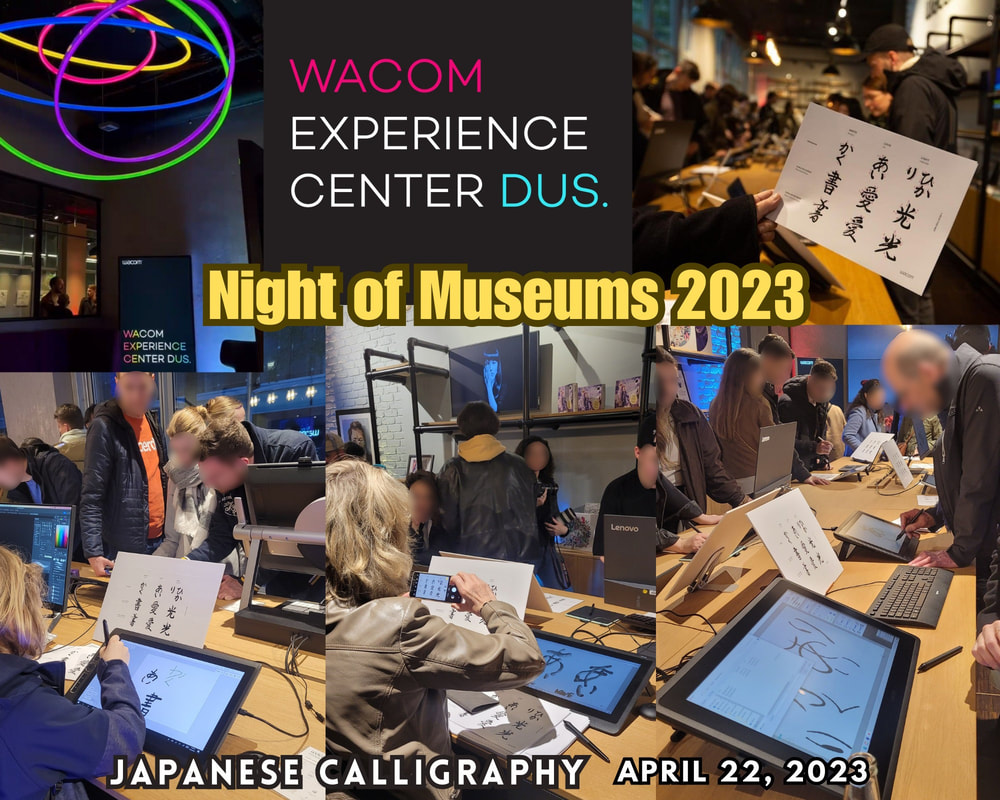

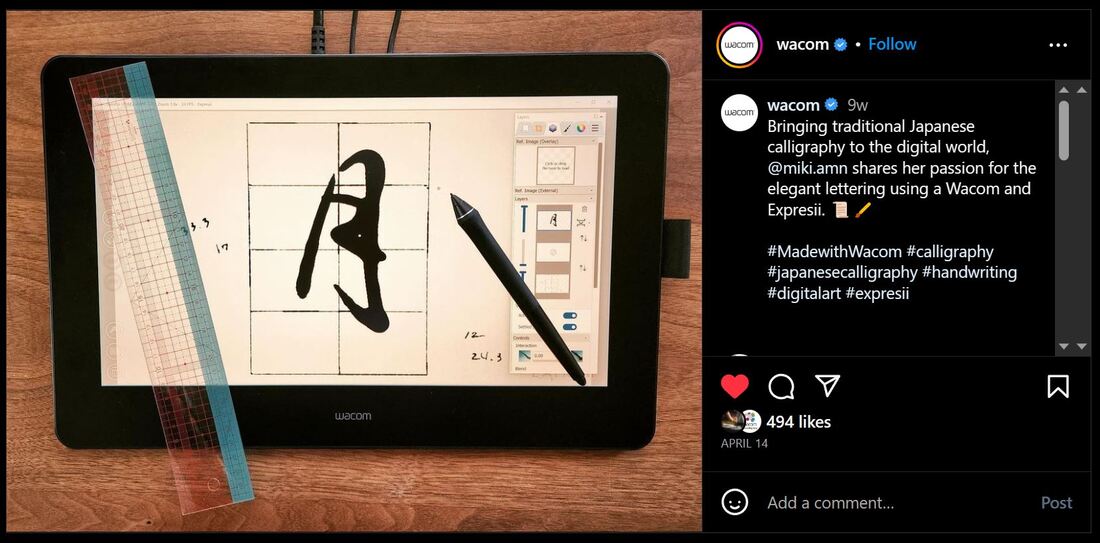

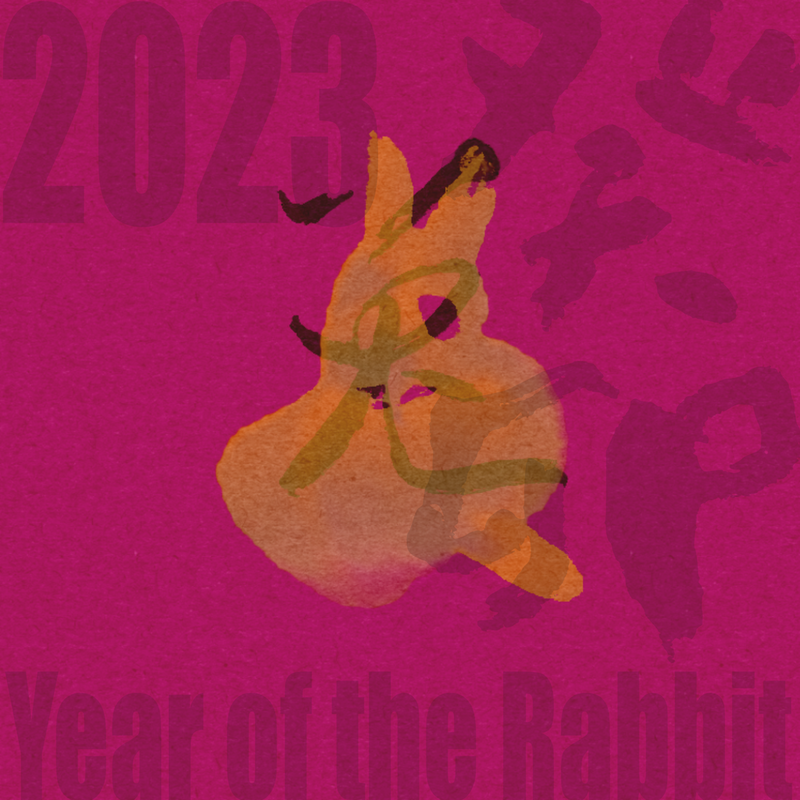

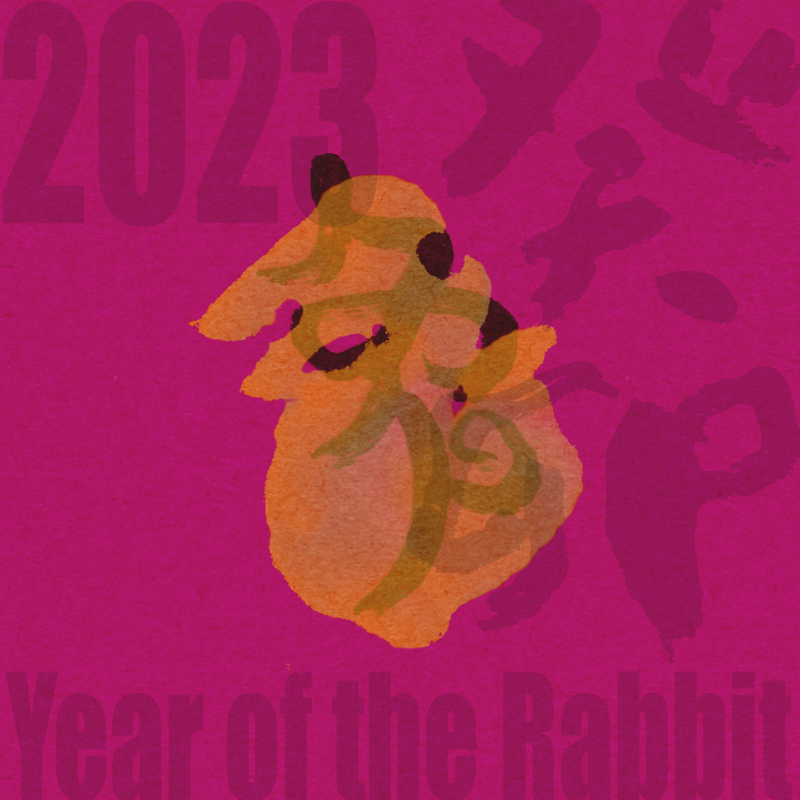

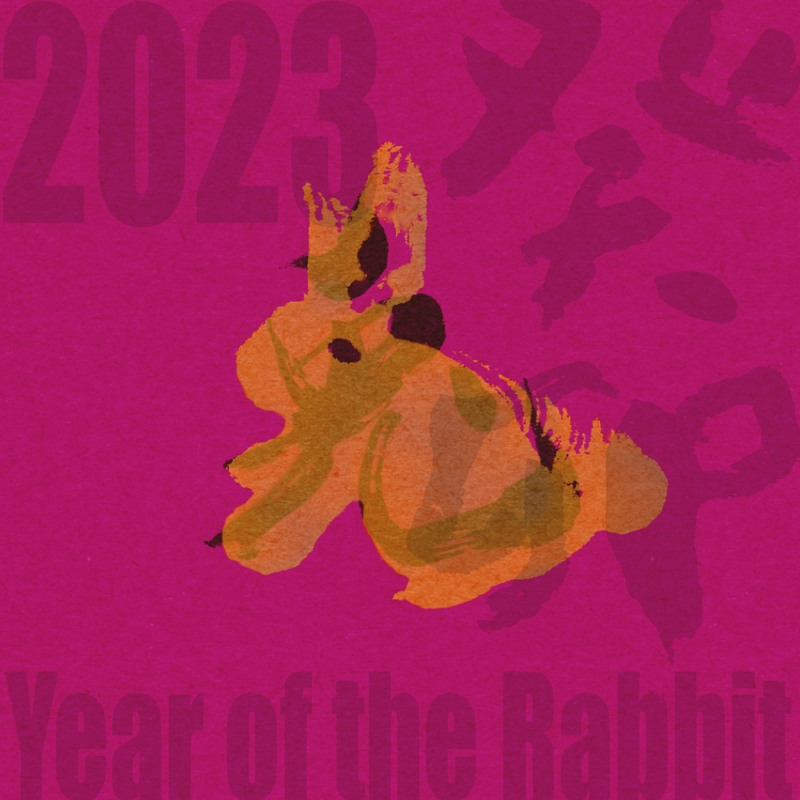

We thank CGLive and Wacom Hong Kong for their support!

I was told that Japanese calligraphy, and Japanese culture in general, has recently become much appreciated in Germany, and Wacom has been showing Expresii for calligraphy at their German Experience Center over the past few months, in events like "Night of Museums" and "Japan Day":

Wacom official artist Miki Amano is also showing the public how to use Expresii with Wacom tablets for digital calligraphy, with sample works including their official tagline written in Chinese.

What's the deal with Eastern calligraphy?

In China, calligraphy has been the basic training for painting: it lets the artist acquire the dexterity to control the brush. Also, calligraphy is inherently an abstract art form. Arguably, cursive calligraphy is considered the highest form of art in China. No wonder Pablo Picasso said, "Had I been born Chinese, I would have been a calligrapher, not a painter." 筆有千秋業: the way of the brush is passed down from generation to generation, and it is still developing.

We did some demos for the Year of the Rabbit 2023 using a Wacom tablet. The great thing about using Expresii is that it gives you the organic quality of real brush and ink, yet you can always undo and redo. Calligraphy, illustration and design:

Finally, we made a video on why Expresii is good for improvised sketching and more (in English, with a short intro in Chinese), which includes quotes from some artists: Ink art is nothing if there's no happy accident.

We're very happy to see our Expresii app paired with Wacom's hardware at the CG Live booth at the Hong Kong Illustration and Creative Show (HKICS) 2022, held on 26-27 Nov 2022. This two-day event was short but packed with visitors, many of them interested in painting and creative work. Despite its short history, the HKICS has quickly become a major event for creatives in Hong Kong. We thank CG Live for installing Expresii on some of the demo machines in their booth for visitors to try.
They also offered a show-exclusive Wacom + Expresii bundle promotion. Besides teaching the introductory classes described below, Sensei Shuen attracted viewers when she demoed Expresii on the latest Wacom Cintiq Pro 27 at the CG Live booth.

Here is Sensei Shuen's digital ink painting demo on the Wacom Cintiq Pro 27:

【Wacom Classroom】 Ink Painting Class

Wacom has been sponsoring the show in recent years, and this time they also sponsored the 'Wacom Classroom' as one of its activities. We were glad to have Sensei Shuen teach two introductory classes on using Expresii for digital ink painting. Sensei Shuen is very skilled with Expresii and has been using it for digital ink painting since its early beta. She also has plenty of teaching experience: while working for an animation studio from 2017 to 2020, she taught junior animators to paint with Expresii, and before that she taught kung fu and extracurricular painting to school children, as well as doll making and manga making to adults. Sensei Shuen taught with great care, and we hope all the students enjoyed the class!

A big thanks to CG Live for having us at the booth and for organizing the Wacom class! We also thank the organizer HKHands for organizing this show! We hope more people will join forces with us to promote digital painting and calligraphy!

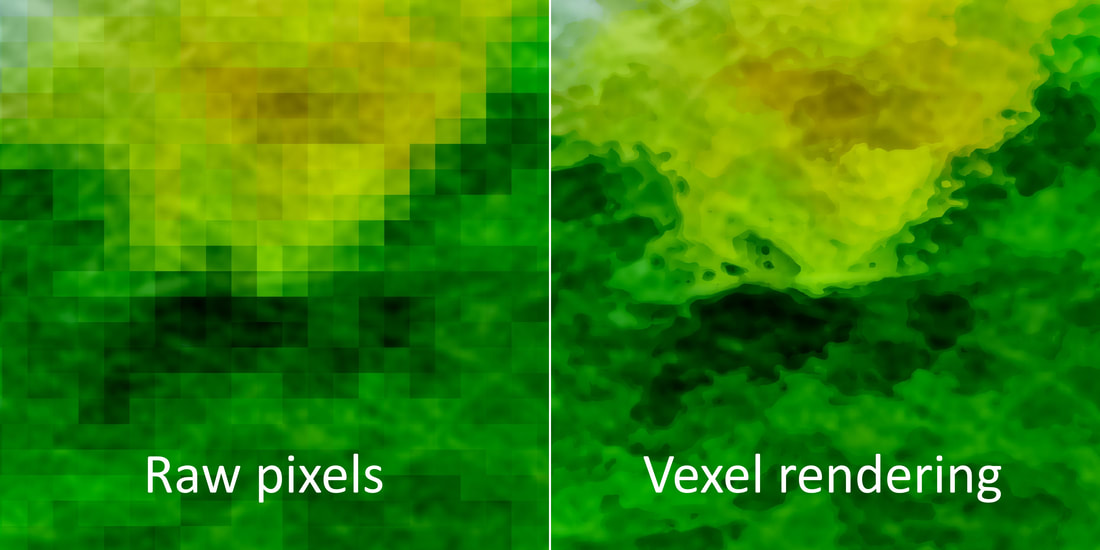

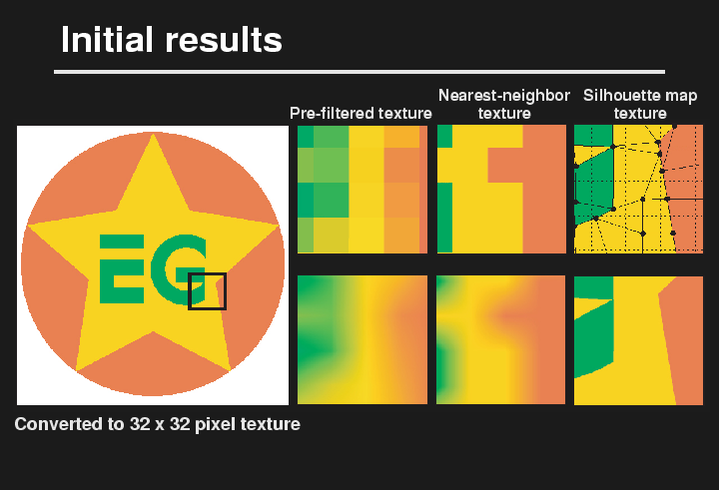

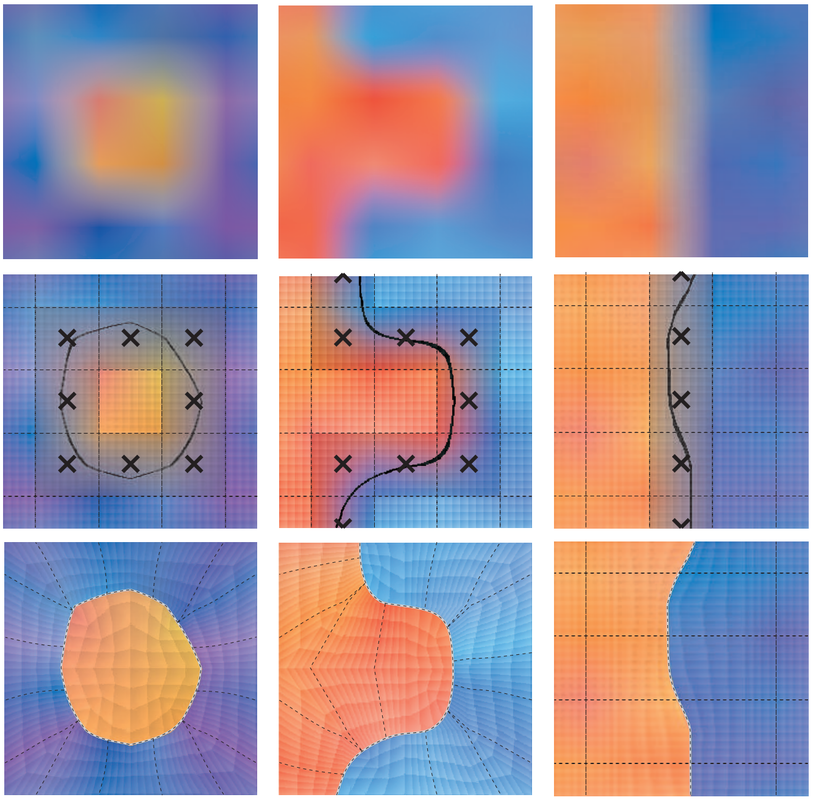

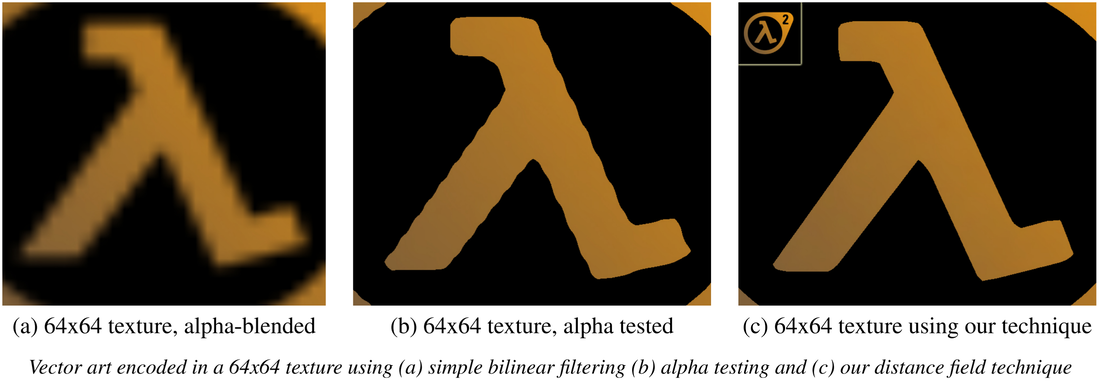

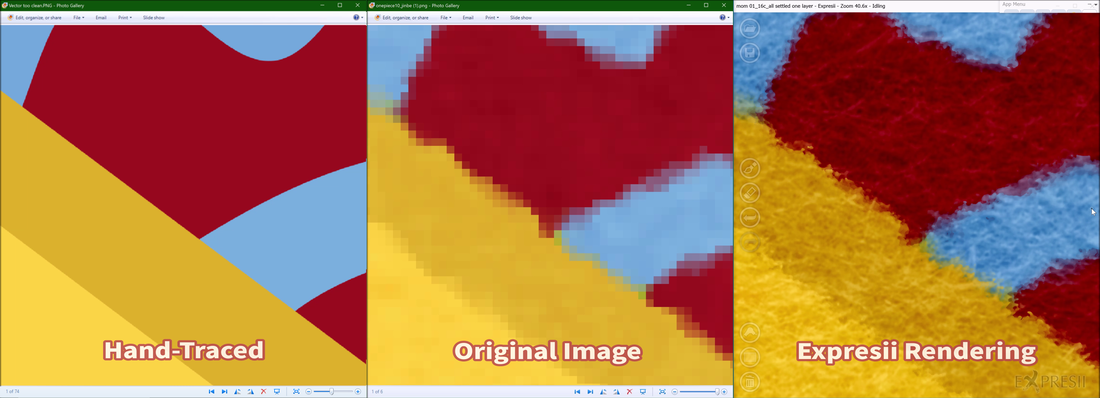

Thanks again to CG Live and HKHands! It has not been an easy journey, so please keep supporting us!

Expresii's novel Vexel Rendering makes pixels zoom like vector! (」゜ロ゜)」

Imported watercolor image displayed at 20x zoom with Expresii's vexel rendering

Introduction - Digital Illustrator's Dilemma: Raster or Vector?

If you as a digital painter have ever needed to print your artwork large, you know how important it is for your artwork to be in high resolution. For over 40 years, digital paint programs have mostly been raster-based, and they treat pixels as squares: if you zoom into your artwork, you see big, fat pixels. If you don't want to see fat pixels or interpolation blur, the current solution is to use vector-based programs instead.

The following is a real-world example of insufficient resolution. The artist is obviously trying to mimic watercolor in this painting, which was printed as a mural. It looks fine from afar, but when you walk up close, you will notice the blurry interpolation.

Trying to get the best of both worlds?

Over the years, there have been several attempts to solve the raster-vs-vector dilemma. One of them is Creature House Expression (first released in 1996; discontinued 2003), which allows mapping bitmaps onto vector strokes. Because those bitmaps are resolution-limited, such strokes become blurry when rendered large. When heavily deformed, stretched bitmaps also look unnatural.

Mischief (released 2013; discontinued 2019) claimed to provide you "the richness of pixel-based brushes AND the scalability of vectors". Mischief is a vector program, but its vector strokes cannot be edited, at least not in any of the released versions. As with other pure-vector programs, it's hard to get a painterly look without using so many vector strokes that the system bogs down. Its raster look was achieved mainly with an airbrush-like brush that gives a dithered falloff, or simply by stacking semi-transparent strokes.
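The "fat pixels or interpolation blur" trade-off can be seen on the smallest possible image. Below is a minimal Python sketch, using plain lists and our own illustrative helper names (`upscale_nearest` and `upscale_bilinear` are not from any app mentioned here), that magnifies a two-pixel black-to-white edge 4x: nearest-neighbour turns each pixel into a bigger square, while bilinear interpolation invents intermediate grey values.

```python
def upscale_nearest(img, factor):
    """Magnify a 2D grayscale image by repeating each pixel as a factor x factor block."""
    return [
        [img[y // factor][x // factor] for x in range(len(img[0]) * factor)]
        for y in range(len(img) * factor)
    ]

def upscale_bilinear(img, factor):
    """Magnify by blending the four nearest source pixel centres."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * factor):
        # Map the output pixel centre back into source coordinates (clamped at borders).
        sy = min(max((y + 0.5) / factor - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        ty = sy - y0
        row = []
        for x in range(w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            tx = sx - x0
            top = img[y0][x0] * (1 - tx) + img[y0][x1] * tx
            bottom = img[y1][x0] * (1 - tx) + img[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out

edge = [[0.0, 1.0]]  # one black pixel next to one white pixel
print(upscale_nearest(edge, 4)[0])   # [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
print(upscale_bilinear(edge, 4)[0])  # [0.0, 0.0, 0.125, 0.375, 0.625, 0.875, 1.0, 1.0]
```

Neither result recovers a sharp edge at the higher resolution: one gives blocks, the other a ramp. This is the gap the hybrid raster/vector approaches in this section try to close.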
Concepts (released 2012) is another vector drawing app, which also supports mapping pixel stamps onto vector paths, in the same vein as Expression's skeletal strokes. Strokes made in Concepts are editable (in the paid version). With basic tools like the airbrush (similar to Mischief's), it's possible to achieve what Mischief can do, namely smooth shading that gives a traditional raster painting look. With pixel-stamp-mapped strokes, raster richness can also be achieved. However, such pixel-stamp strokes still show interpolation blur when zoomed in.

Mingling vector & raster strokes in Adobe Fresco

Adobe's latest paint program Fresco (released 2019) allows both pixels and vectors in the same artwork (code-named 'Gemini' for 'the combination of pixels and vectors in a single app'). However, putting the two types of strokes together makes the whole artwork look incoherent when you zoom in, with vector strokes very sharp and raster strokes pixelated. The effective resolution is thus limited by that of the raster strokes. Adobe's previous app, Adobe Eazel (released 2011; discontinued 2015?), generated thousands of semi-transparent polygons to mimic raster richness, but the results don't look nice because the smooth, clean curves are far from organic.

Affinity Designer (released 2014) also allows both pixels and vectors in the same artwork. It provides better linkage between its vector and raster personas by allowing raster strokes to be clipped onto crisp vector shapes for texture or grain. It also allows pixel-mapped vector strokes. Whenever bitmaps are involved, though, strokes still pixelate or blur when zoomed in.

HeavyPaint (released 2019) saves your artwork as stroke information and can regenerate the painting at a higher resolution, typically 2x or 4x. Since the stroke paths are vectors, this can be considered a hybrid raster-vector approach.
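The "save strokes, re-render larger" idea behind HeavyPaint can be sketched in a few lines. This is not HeavyPaint's (or Expresii's) renderer; it is a minimal Python illustration under simplifying assumptions: each stroke is a hypothetical `Stroke` polyline with a constant radius in resolution-independent canvas units, and rendering just marks output pixels whose centres fall within that radius of the path.

```python
import math
from dataclasses import dataclass

@dataclass
class Stroke:
    """Hypothetical stored stroke: a polyline plus a constant radius,
    both in resolution-independent canvas units."""
    points: list
    radius: float

def dist_to_segment(px, py, ax, ay, bx, by):
    """Euclidean distance from point P to line segment AB."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project P onto AB and clamp the projection to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def render(strokes, width, height, scale):
    """Replay the stored strokes into a binary mask magnified by `scale`."""
    w, h = width * scale, height * scale
    mask = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Map the output pixel centre back into canvas units.
            cx, cy = (x + 0.5) / scale, (y + 0.5) / scale
            for s in strokes:
                segments = zip(s.points, s.points[1:])
                if any(dist_to_segment(cx, cy, *a, *b) <= s.radius for a, b in segments):
                    mask[y][x] = 1
                    break
    return mask

strokes = [Stroke(points=[(1.0, 4.0), (7.0, 4.0)], radius=1.0)]
for scale in (1, 2, 4):
    mask = render(strokes, 8, 8, scale)
    print(f"{scale}x: {len(mask[0])} x {len(mask)} pixels, {sum(map(sum, mask))} covered")
```

Because the path, not the pixels, is stored, each magnified render has edges as sharp as its own pixel grid. The cost grows with strokes times output pixels, though, which is why such apps must be heavily optimized once an artwork contains many strokes.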
In fact, we can regard any vector-based app as a raster app that redraws the visible strokes whenever the user edits them or changes the view. Such apps need to be highly optimized to handle a large number of strokes and stay responsive. To the best of our knowledge, none of the apps out there can really give raster richness and ultra-high-res output at the same time.

Previous Academic Work

Trying to improve the rendering quality of magnified bitmaps is surely not new. Here, let's compare our novel vexel rendering technique with other related methods that involve raster-to-vector conversion.

Pixel-Art-Specific

These stem from the need to render old video games from the '80s and early '90s on modern hardware. One notable work on this is the SIGGRAPH 2011 paper by Kopf and Lischinski. It obtains very nice results, but the global nature of the algorithm makes it hard to implement on the GPU. Two years later, Silva et al. published a GPU algorithm that gives real-time performance:

These methods are designed specifically for very low-res images, using heuristics on connected pixels, and are thus not suitable for digital painting at common canvas sizes.

Embedding Explicit Edges

Around 2004-2005, we saw several papers dealing with rendering discontinuities in sampled images. They include the Bixel (2004), Silhouette Maps (2004), Feature-based Textures (2004) and Pinchmaps (2005). The main insight here is that the usual image interpolations (bilinear or bicubic) handle discontinuities poorly. All these methods attempt to preserve sharp edges by encoding boundary information in the images. The earlier methods are not very fast, because deriving and using the edge information is complex. The later Pinchmap improves run-time performance by removing the need for case-by-case handling, and can thus be rendered very efficiently on the GPU.
However, time-consuming pre-processing is still required to derive the 'pinching' configuration, so it is still not suitable for real-time painting applications.

Implicit-Function-Based

Ray et al. 2005's Vector Texture Map was an early use of implicit function values to define discontinuities in glyphs, avoiding evaluation of explicit curves on the GPU. Qin et al. 2006 later used a signed distance function (SDF) as the implicit function. These functions are evaluated on the fly and in a hierarchical manner for robustness. In 2007, Chris Green of Valve showed how they used SDFs to render glyphs in a game environment. In such an application, they did not strive to render the glyphs exactly (e.g. with sharp corners), so they could simply use rasterized SDF values in a much simplified way, which runs well even on low-end graphics hardware. Note that all these methods work well only for rendering binary-masked glyphs. The SIGGRAPH 2010 paper Vector Solid Textures uses SDFs to generate solid textures, but the encoding requires complex pre-processing and is still limited by the requirement that regions not overlap. Allowing overlapping regions requires more SDFs, and thus more storage and processing. To our knowledge, no one has yet found a way to use implicit functions to represent full-color overlapping regions efficiently.

Our solution: Vexel Rendering

Is it actually possible to have raster richness and be able to scale it much larger? I think we found a solution, at least for the case of organic, natural-media digital painting. Specifically, our goal is to render our artwork as if it were done on real paper*. We perform this magic with a shader program. A shader is a program that runs on the GPU and calculates the final image output. Your image data may be stored in an array, but not in the traditional 'pixel' sense. Each slot stores color or other attributes, and it's then up to the shader how to render the final image from that information.
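To make both the shader idea and the SDF trick concrete, here is a minimal Python sketch. It illustrates the prior work discussed above, not Expresii's vexel renderer, and the grid size, circle and zoom are hypothetical. We store a circle's signed distance at the texel centres of an 8×8 grid, then compute each output pixel independently, as a fragment shader would: bilinearly sample the stored distance and threshold it at zero. Green's paper stores distances remapped so the edge sits at 0.5 alpha; keeping raw signed distances and thresholding at zero is equivalent. Doing the same with a plain binary mask produces grey, blurred edges instead.

```python
import math

N = 8                      # coarse grid resolution (hypothetical)
CX, CY, R = 4.0, 4.0, 2.5  # circle centre and radius in grid units (hypothetical)

def circle_sdf(x, y):
    """Signed distance to the circle: negative inside, positive outside."""
    return math.hypot(x - CX, y - CY) - R

# Store the SDF at texel centres, like a low-resolution distance-field texture.
sdf_tex = [[circle_sdf(i + 0.5, j + 0.5) for i in range(N)] for j in range(N)]
# For comparison, store a plain binary coverage mask at the same resolution.
mask_tex = [[1.0 if d <= 0.0 else 0.0 for d in row] for row in sdf_tex]

def bilinear(tex, x, y):
    """Bilinearly sample a texture at continuous coordinates (texel centres at +0.5)."""
    n = len(tex)
    fx = min(max(x - 0.5, 0.0), n - 1.0)
    fy = min(max(y - 0.5, 0.0), n - 1.0)
    x0, y0 = int(fx), int(fy)
    x1, y1 = min(x0 + 1, n - 1), min(y0 + 1, n - 1)
    tx, ty = fx - x0, fy - y0
    top = tex[y0][x0] * (1 - tx) + tex[y0][x1] * tx
    bot = tex[y1][x0] * (1 - tx) + tex[y1][x1] * tx
    return top * (1 - ty) + bot * ty

def magnify(zoom, shade):
    """Evaluate `shade` once per output pixel centre, as a fragment shader would."""
    size = N * zoom
    return [[shade((x + 0.5) / zoom, (y + 0.5) / zoom) for x in range(size)]
            for y in range(size)]

def grey_levels(img):
    """Distinct values strictly between black and white, i.e. blurred pixels."""
    return {v for row in img for v in row if 0.0 < v < 1.0}

zoom = 16
sharp = magnify(zoom, lambda x, y: 1.0 if bilinear(sdf_tex, x, y) <= 0.0 else 0.0)
blurry = magnify(zoom, lambda x, y: bilinear(mask_tex, x, y))

print(len(grey_levels(sharp)), len(grey_levels(blurry)) > 0)  # 0 True
```

As the text notes, one distance field only encodes a single inside/outside boundary, which is why this works for glyphs but not, by itself, for full-colour overlapping paint.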
In a way, you can consider traditional pixels as your final image discretized; most other paint programs display them as colored squares tiled together to form the final image. Our shader, on the other hand, takes the stored information and generates the final image. Our vexel rendering works well for our paint simulation output, ordinary raster illustrations, and photos of watercolor artwork. For example, the following video takes a sample illustration from irasutoya as input and shows that you can still add our simulated strokes to it. The first demo image in this article uses a photo of real watercolor marks as input.

* We will try to extend our program to render other paint media like oil paint later.

Raster Richness

Our paint information is stored in an array, allowing raster richness. In fact, Expresii simulates watercolor/ink flow, giving a rich, organic outcome unmatched by any other app. On top of that, we render the artwork as if it were done on a real piece of paper, showing details down to the paper-fiber level. Thanks to its raster nature, you can add an unlimited number of strokes to the page without bogging down the system, unlike in conventional vector programs. Raster operations like blurring or smudging are possible; such operations would be difficult in a pure-vector program.

Vector Scalability

Because the output is generated, its resolution is flexible. Our current maximum output resolution is limited by our raster paper texture, which becomes blurry beyond 30x zoom. With new tools like Material Maker, substrate textures can be designed as shaders, so a future version of Expresii may replace the paper texture with a resolution-independent one.

Comparison between raw pixels and vexel rendering at 50x zoom

At moderate zoom like 20x, our rendered image looks like real non-volumetric paint marks on paper.
By varying the shading from the paper texture, we can emulate watercolor/ink soaked into the paper fibers, or crayon/pencil marks laid on the paper surface. At very high zoom like 100x, our rendering reveals curved shapes (see the first image of this article), which look like paper cutouts. Our vexel rendering can smoothly transition from the look of an ordinary raster image to shaded vector shapes that integrate very nicely with the paper substrate.

Tailor-made for our need

As shown in the above image, traditionally vectorized images are too clean, too sterile. Current auto-trace results tend to give you simple geometric shapes (like the left part of the above figure), which poorly represent textural details like those of watercolor marks. Traditional raster digital paintings are good at capturing details, but they usually cannot be as large as 16k x 16k pixels unless your system is beefy enough and your app supports such a large canvas (popular iPad app Procreate's max size is 8k x 8k or equivalent, as of Oct 2021). In comparison, our current Youji 2.0 rendering engine can output very nice textural details up to around 40x magnification, and shaded vector shapes at 500x. We also don't need any model training, unlike AI-based approaches that give you 2x to 8x magnification at non-interactive rates. Everything is local and instant.

For organic digital painting, we actually prefer our vexel rendering over the simple curves produced by the image tracers in existing software tools, which give a flat, planar look. You can tune your image tracer to output more polygons, as in the above figure, but it is still not easy to get as much detail as our vexel rendering.

A Paradigm Shift

Existing vector programs are great for creating graphics composed of clean lines or shapes, and if you are after such graphics, by all means use those tools.
On the other hand, for digital painting, one major goal of many paint programs is to give a natural-media look. It seems we are stuck with the idea that vector primitives should be simple, clean curves, and thus results from current raster-to-vector conversions still look bland. Here, we render the raster data as 'vexels' that allow huge magnification while keeping all textural details.

Conclusion

Expresii is the first digital painting app that can really give both raster richness and ultra-high-res output scaling, thanks to its novel GPU-based simulation and rendering algorithms. Many artists used to traditional media do not like digital painting because digital paintings get pixelated when zoomed in. We believe Expresii has largely fixed this issue and hope more artists will be willing to go digital. Our vexel rendering is not a general solution to the problem of image interpolation, since our method relies on the fact that many natural media have a substrate that gives a grain texture. Currently Expresii only simulates water-based art media. We plan to add other media like pastel and oil in the near future. Stay tuned.

Update: The following video shows 'vexel rendering' in actual painting, and shows that we are able to export 32k images thanks to our ultra-zooming capability:

Why the term 'vexel'

When developing new tech to avoid seeing fat pixels in digital painting, I wanted to give it a name suggesting a combination of vector and pixel, and I came up with 'vexel'. Later I found out that 'vexel' had already been coined by Seth Woolley, at least as early as 2006, to refer to raster images that look like vector graphics. I tried to find another name, but couldn't find one as meaningful. So I decided to stick with 'vexel'. After all, our rendering does look like vexel art by Seth's definition.

Earlier this year, in April, our Dr. Nelson Chu was invited to give a talk at the ACAS Inaugural Conference. As you may know, Expresii has been used in the production of a few animated shorts and the ink-painting-styled feature animation Red Squirrel Mai (although the production of Mai may be halted). Here we'd like to elaborate on the questions raised for Nelson during the conference:

Q: From your lecture, we have seen the many possibilities of traditional ink painting in digital art. I think it's a combination of traditional art and computer skills, as well as a deep understanding of animation. How do you manage the relationship between them?

A: Hiring multi-talented people can help bridge the gaps. I believe they have a higher chance of solving problems that have not been solved before. I have been lucky to work with some tech-savvy and self-motivated artists. For instance, my friend Shuen Leung, who has been using Expresii since its early versions, can produce nice work without much instruction from me. Whenever I add some new functionality to the program, she just explores it and sees what she can come up with. Our (then) director Angela Wong soon recognized Shuen's abilities and invited her to join the production team. Having Shuen on the team has been a privilege. She's also a kung fu practitioner, who can advise us on the kung fu moves that would appear in the Mai movie.
In my role, I simply try my best to fulfill the program feature requests from the directors. They are the ones actually managing the different people in the production team. I can't speak for them, but from what I've observed, putting people in the right roles definitely helps things go smoothly.

Q: How can experts in different fields work well together?

A: Mutual respect and good communication are important. Try to understand others' points of view and the constraints they have. For example, many of us have opinions on what ink-painting-styled animation should look like. We may offer our views, and in fact there have been some heated discussions. Ultimately, it is the film director who decides. For the production to go on, we let those in managing positions do their jobs. As a decision maker, one has to consider all the opinions and make appropriate choices. It surely is not easy.

Q: Is there an inherent contradiction between the serendipity of ink painting and the standardization resulting from computer algorithms or automation?

A: I actually don't see a contradiction here. What you do depends on the project at hand. As an artist in general, I think serendipity is great, and that's mainly why I started building my own tool for brush painting. But for animation production, we hire many animators to work on the same film. We need standardization (e.g. a style guide) so that the resulting animation looks coherent. Another reason for standardization is that we want to be able to control the amount of flickering between frames caused by stroke differences. Some say, "if it's repeatable, it's not art". But here you actually want the strokes to be repeatable with the desired parameters! ^_^ Imagine that when you're halfway through your frames, your art director comes to you and asks, "Can you make the octopus's tentacles a bit thicker?" If your strokes are auto-generated instead of hand-painted, you can simply change the parameters and have the computer re-render them!
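The octopus example relies on strokes being generated from parameters plus a fixed random seed. Here is a minimal Python sketch of that idea under our own assumptions; `tentacle_stroke` and its parameters are hypothetical and not part of Expresii or any production pipeline. All randomness comes from a private, seeded RNG, so re-rendering reproduces the same wobble exactly (no frame-to-frame flicker), while changing `thickness` alters only the stroke width.

```python
import math
import random

def tentacle_stroke(seed, thickness, n_points=8):
    """Hypothetical procedural stroke: a list of (x, y, width) samples.

    All randomness comes from a private, seeded RNG, so the same seed and
    parameters always reproduce exactly the same stroke on every frame.
    """
    rng = random.Random(seed)
    samples = []
    for i in range(n_points):
        t = i / (n_points - 1)                          # 0 at the base, 1 at the tip
        x = 10.0 * t
        y = math.sin(3.0 * t) + rng.uniform(-0.2, 0.2)  # organic wobble
        width = thickness * (1.0 - 0.8 * t)             # taper toward the tip
        samples.append((x, y, width))
    return samples

frame_1 = tentacle_stroke(seed=7, thickness=2.0)
frame_2 = tentacle_stroke(seed=7, thickness=2.0)
thicker = tentacle_stroke(seed=7, thickness=3.0)  # the art director's request

print(frame_1 == frame_2)  # True: re-rendering gives an identical stroke
print([s[:2] for s in thicker] == [s[:2] for s in frame_1])  # True: same path, only width changes
```

Using `random.Random(seed)` rather than the global RNG keeps each stroke's randomness independent of whatever else the program draws, which is what makes per-stroke re-rendering safe.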
Q: How do you see the future of ink-painting-styled animation (水墨動畫)?

A: Previously, only large studios could afford to do research work. They developed new algorithms for, say, simulating animal fur to support their storytelling. Nowadays, more individuals are learning programming, and one can come up with new algorithms designed specially for ink-painting-styled animation, instead of relying on existing software that may not be suitable for the effects one is after. That said, if some party is determined to invest in new ink-painting-styled animation, they still need to be patient, as developing new tailor-made solutions and putting them into a workable pipeline usually requires a lot of experimentation. The good news is that Angela Wong has prototyped a workable solution that is already used in the animated short Find Find (as discussed in my talk). We just need to develop it further and put it to the test more. Let us know if you're interested in joining this journey.

Q: How can artists or creatives get more involved in computer programming or the technological side?

A: They can learn programming online for free. Just search for "creative coding" and you will find a bunch of tutorials and samples. I think that's exactly how Angela Wong learned programming. I believe you will easily be inspired to use code for your next project!

Q: A really interesting point was brought up about how technology might be infiltrating art and calligraphy. On that note, what are your opinions on using Artificial Intelligence (AI) for storyboarding and animation? Do you think it will enhance our experiences or just make us obsolete?

A: Current state-of-the-art AI needs a large number of samples as input to learn some pattern in them and apply that same pattern to perform certain tasks. That means it cannot yet be creative all by itself. Computers are very good at optimization.
If you have a well-defined goal or cost function, computer programs can find the optimal configuration that minimizes that cost. I think the general public's view of AI may be skewed by movies or fiction, leading people to think AI can already think almost like a human. No, we're still quite far from that. And speaking of AI, beware: there are some opportunists trying to fool you with fake AI!

Afterword

When Daisy Du of ACAS first contacted me in 2019, I was a bit surprised that there is an academic association dedicated to Chinese animation studies. I'm glad to see such enthusiasm for ink-painting-styled animation from humanities scholars. I hope more resources can be given to accelerate the modern development of CGI ink-painting-styled animation.

Here, I'd like to show you a 2018 tweet by a Japanese CG artist commenting on the lost tech of ink painting animation (水墨画によるアニメーション): "When will a hero appear who uses Expresii to make animation?"
